The increasing complexity of graphics processors creates a barrier to entry for most potential rivals, as it would be challenging to match Nvidia’s large R&D budget. The firm has a first-mover advantage in the autonomous driving market that could lead to widespread adoption of its self-driving platform. The ongoing expansion of artificial intelligence and deep learning, both of which rely on Nvidia’s graphics chips, presents the firm with a potentially massive growth opportunity.

PC gaming enthusiasts generally purchase high-end discrete GPUs offered by the likes of Nvidia and AMD. Deep learning involves feeding large swaths of data to techniques that develop algorithms able to reach conclusions in the same way humans do. Today’s basic variants of AI include consumer-based digital assistants, image recognition, and natural language processing.

Nvidia’s DRIVE PX platform is a deep learning tool for autonomous driving. It is being used in research and development by more than 370 partners. The company views the car as a supercomputer on wheels, although this segment currently contributes relatively little to the top line. Nvidia has patents related to the hardware design of its GPUs, in addition to the software and frameworks used to take advantage of GPUs in gaming, design, visualization, and other graphics-intensive applications. The latest PC games typically require system software updates that optimize GPU performance.

Nvidia has a cost-leadership advantage and intangible assets related to the design of graphics processing units (GPUs). The firm is the originator of and leader in discrete graphics, having captured the lion’s share of the market from longtime rival AMD. The market has significant barriers to entry in the form of advanced intellectual property: even chip leader Intel was unable to develop its own GPUs despite its vast resources and ultimately needed to license IP from Nvidia to integrate GPUs into its PC chipsets. Nvidia has gained share at the expense of AMD as gamers have moved from mainstream graphics cards to the performance and enthusiast segments. These GPUs range from $150 at the low end to over $1,000 for premium cards, with Nvidia’s gaming gross margins in the high-50% range.

Web behemoths such as Google, Facebook, Amazon, and Microsoft have found GPUs to be adept at accelerating cloud workloads. To train a computer to recognize spoken words or images, it must be exposed to massive amounts of data from which it can learn. Inference involves taking what the model learned during the training process and applying it in real-world applications to make decisions. Training is well suited to GPUs, which have a massively parallel architecture.

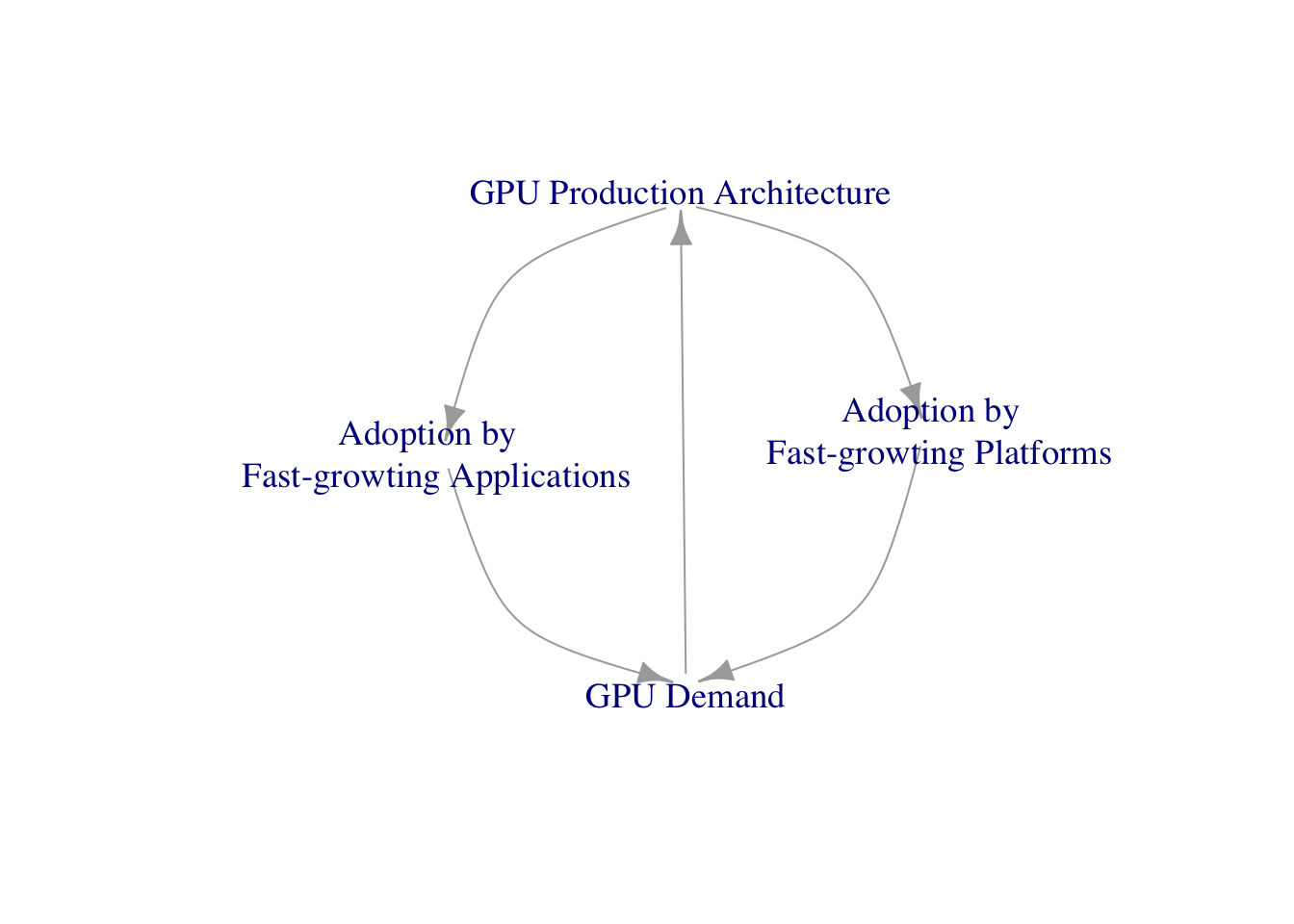

Nvidia has become a key player in the artificial intelligence accelerator market with its GPUs for AI training and inference workloads. The firm launched its latest A100 data center GPUs, which boast impressive performance enhancements over their predecessor, the V100. Nvidia is a prime beneficiary of the burgeoning AI and self-driving trends. Data center revenue grew considerably, as customers leverage Nvidia’s GPUs for both training and inference in key AI applications such as natural language processing (NLP).